Europe Rejects AI Rulebook Amidst Trump Administration Pressure

European Union's Stance on AI Regulation

The Proposed AI Rulebook

The proposed AI rulebook, a comprehensive piece of legislation, aimed to establish a unified regulatory framework for artificial intelligence within the European Union. Its objectives included promoting the ethical development of AI, mitigating risks associated with its use, and fostering trust in AI systems. The scope of the rulebook was broad, encompassing various sectors, including healthcare, finance, and transportation.

- Data Privacy Rules: Stricter data protection measures, building upon the GDPR (General Data Protection Regulation), were proposed to ensure the responsible handling of personal data used in AI systems.

- Algorithmic Transparency: The rulebook called for increased transparency in algorithms, enabling users to understand how AI systems make decisions and ensuring accountability.

- Liability Frameworks for AI-driven Systems: The establishment of clear liability frameworks was central, addressing issues of responsibility when AI systems cause harm.

Together, these provisions were meant to form a unified European AI framework with accountability at its core.

Reasons for the Strict Approach

Europe's stringent approach to AI regulation stems from a deep concern for ethical considerations, data protection, and the prevention of algorithmic bias. The influence of GDPR is significant, setting a precedent for robust data protection that extends to AI applications. Three potential harms in particular shaped the EU's position:

- AI Bias Against Minorities: Concerns about AI systems perpetuating existing societal biases against marginalized groups were central to the EU's approach.

- Job Displacement: The potential for widespread job displacement due to automation driven by AI was another significant concern.

- Potential Misuse of AI in Surveillance: The EU is wary of the misuse of AI for mass surveillance and erosion of civil liberties.

This focus on ethical and responsible AI development puts data protection at the center of the EU's approach.

The Trump Administration's Counter-Position

The American Approach to AI

The Trump administration, in stark contrast to the EU, favored a largely hands-off approach to AI regulation, prioritizing minimal government intervention and market-driven solutions. This preference for "deregulation of AI" reflected a belief that the free market would naturally regulate itself, fostering innovation without the need for extensive government oversight.

- Limited Federal Regulation: The Trump administration largely refrained from enacting sweeping federal regulations on AI, preferring to focus on promoting research and development.

- Emphasis on Industry Self-Regulation: The administration encouraged industry self-regulation and the development of ethical guidelines by private companies rather than imposing stringent government rules.

- Focus on Economic Competitiveness: The administration emphasized the importance of maintaining US economic competitiveness in the global AI race, arguing that excessive regulation could stifle innovation.

Together, these positions made up a US AI policy built on minimal government intervention.

Arguments Against Strict AI Regulation

The Trump administration and its supporters argued that stringent AI regulations would stifle innovation and hinder economic competitiveness. In their view, overregulation would hold back the development of groundbreaking AI technologies, slowing both technological advancement and economic growth.

- Inhibition of Innovation: Opponents argued that excessive regulation would discourage investment in AI research and development, slowing the pace of progress.

- Loss of Economic Competitiveness: They also warned that stringent regulations could place US companies at a disadvantage compared to those in countries with less restrictive policies, hindering economic growth.

- Unintended Negative Consequences: Concerns were raised that poorly designed regulations could lead to unforeseen negative consequences, undermining the very goals they were intended to achieve.

These arguments centered on protecting AI innovation and preserving a competitive advantage in order to capture the economic benefits of AI.

The Transatlantic Divide and its Implications

Differing Regulatory Philosophies

The divergence between the EU and US approaches to AI regulation reflects fundamental differences in their regulatory philosophies. The EU prioritizes ethical considerations, data protection, and human rights, while the US emphasizes economic growth and market-driven solutions.

- EU Emphasis on Human Rights: The EU's approach emphasizes protecting individual rights and freedoms, even at the potential cost of slower technological progress.

- US Focus on Economic Growth: The US, on the other hand, prioritizes fostering economic growth and technological innovation, even if it entails some risks.

This divergence between the EU and the US raises serious questions about whether international AI standards and effective global AI governance can be achieved.

Future of AI Regulation

The conflict between the EU and the US over AI regulation highlights the challenges of establishing global AI governance. While the Biden administration may shift towards a more regulated approach, the fundamental differences in regulatory philosophies will likely persist.

- National Level Regulations: Different countries will likely adopt varying levels of AI regulation, leading to a fragmented global regulatory landscape.

- International Cooperation: Increased collaboration and negotiation will be essential to establish international standards that balance innovation with ethical considerations.

- Evolving Landscape: The landscape of AI regulation will continue to evolve as AI technologies advance and their societal impacts become more apparent.

The future of AI regulation will likely depend on whether international cooperation can produce effective global standards.

Conclusion: Europe Rejects AI Rulebook: A Turning Point in Global AI Governance

This analysis has revealed a significant transatlantic divide in approaches to AI regulation. The EU's prioritization of ethical considerations and data protection contrasts sharply with the US's emphasis on minimal government intervention and market-driven solutions. Europe's rejection of the proposed rulebook underscores this tension, highlighting the ongoing challenge of balancing the promotion of innovation with the need to ensure ethical and responsible AI development. The long-term implications are significant, affecting international cooperation and the shaping of global AI governance. The debate is far from over, and understanding the differences between European and American approaches to AI governance is critical for navigating the future of artificial intelligence.

Featured Posts

-

Is Betting On Natural Disasters Like The La Wildfires A Sign Of The Times

Apr 26, 2025

Is Betting On Natural Disasters Like The La Wildfires A Sign Of The Times

Apr 26, 2025 -

Greenland False News Denmark Points Finger At Russia In Growing Us Dispute

Apr 26, 2025

Greenland False News Denmark Points Finger At Russia In Growing Us Dispute

Apr 26, 2025 -

Nintendo Switch 2 Preorder My Game Stop Line Experience

Apr 26, 2025

Nintendo Switch 2 Preorder My Game Stop Line Experience

Apr 26, 2025 -

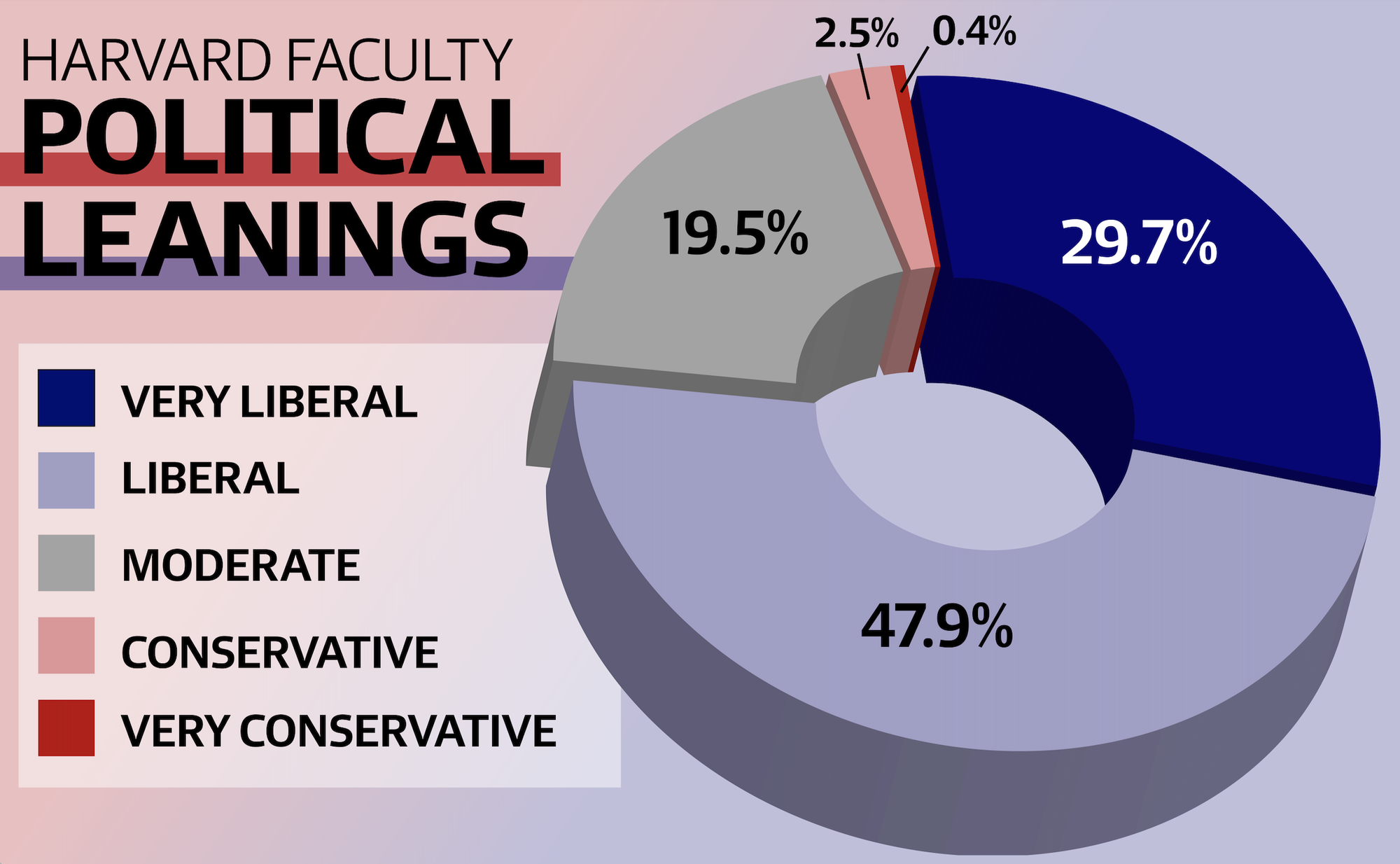

A Conservative Harvard Professors Prescription For Harvards Future

Apr 26, 2025

A Conservative Harvard Professors Prescription For Harvards Future

Apr 26, 2025 -

7 New Orlando Restaurants To Explore Beyond Disney World In 2025

Apr 26, 2025

7 New Orlando Restaurants To Explore Beyond Disney World In 2025

Apr 26, 2025