Voice Assistant Creation Revolutionized: OpenAI's 2024 Developer Conference


New OpenAI APIs for Enhanced Voice Interaction

OpenAI's 2024 developer conference introduced API updates across the three core components of a voice assistant: speech-to-text, natural language understanding, and text-to-speech. Together, these updates make engaging and effective voice assistants more achievable.

Improved Speech-to-Text Capabilities

The new OpenAI speech-to-text APIs bring improvements in accuracy, speed, and language support. This translates to more reliable and responsive voice assistants that can understand a wider range of accents and dialects and cope better with background noise.

- Improved Accuracy: OpenAI claims a 15% reduction in word error rate compared to previous models, achieving near-human levels of accuracy in controlled environments.

- Multilingual Support: The APIs now support over 100 languages, significantly expanding the global reach of voice assistant applications.

- Reduced Latency: Real-time transcription latency has been reduced by 30%, enabling faster and more fluid voice interactions. This is crucial for creating a natural and responsive user experience.

- Enhanced Noise Reduction: Improved algorithms reduce the impact of background noise, leading to more accurate transcriptions even in noisy environments. This matters most for real-world applications, where clean audio is rare.
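To make the accuracy claim concrete, here is a minimal sketch of how word error rate (WER) is commonly computed: the word-level edit distance between a reference transcript and the recognizer's output, divided by the number of reference words. The function names and sample sentences are illustrative, and the `relative_reduction` helper assumes the quoted 15% is a relative reduction, which the announcement as summarized here does not specify.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # previous[j] holds the edit distance between the reference words seen
    # so far (minus the current one) and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


def relative_reduction(old_wer: float, new_wer: float) -> float:
    """Fractional improvement of new_wer over old_wer (0.15 means 15% lower)."""
    return (old_wer - new_wer) / old_wer
```

Running both an older and a newer model over the same recorded test set and comparing their WER values is a simple way to check a vendor's accuracy claim against your own users' audio.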


Advanced Natural Language Understanding (NLU)

OpenAI's advancements in Natural Language Understanding (NLU) are equally impressive. These improvements enable voice assistants to understand context, intent, and sentiment with greater accuracy, leading to more natural and helpful interactions.

- Contextual Understanding: The new APIs can maintain context across multiple turns in a conversation, allowing for more fluid and meaningful dialogues.

- Improved Intent Recognition: The enhanced NLU models achieve higher accuracy in recognizing user intent, even with complex or ambiguous requests.

- Sentiment Analysis: The ability to detect the user's emotional state allows for more empathetic and appropriate responses.

- Entity Extraction: The APIs now perform more precise extraction of key entities from user input, crucial for task completion and information retrieval.
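Multi-turn context usually comes down to sending the earlier turns along with each new request. The sketch below keeps a rolling history in the role/content message format used by chat-style APIs. The `Conversation` class and the `respond` callable are hypothetical names for illustration; in a real assistant, `respond` would wrap whatever model call you use.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Keeps a rolling message history so each reply can see earlier turns."""

    def __init__(self, system_prompt: str, max_turns: int = 10) -> None:
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        self.system: Message = {"role": "system", "content": system_prompt}
        self.history: List[Message] = []
        self.max_turns = max_turns  # one turn = user message + assistant reply

    def messages(self) -> List[Message]:
        """System prompt followed by the retained turns, oldest first."""
        return [self.system] + self.history

    def ask(self, user_text: str, respond: Callable[[List[Message]], str]) -> str:
        self.history.append({"role": "user", "content": user_text})
        reply = respond(self.messages())
        self.history.append({"role": "assistant", "content": reply})
        # Keep only the most recent turns so requests stay bounded in size.
        self.history = self.history[-2 * self.max_turns:]
        return reply
```

Trimming by turn count is the simplest policy; a production assistant might instead trim by token budget or summarize older turns.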


Text-to-Speech Synthesis Enhancements

The text-to-speech (TTS) updates focus on more natural-sounding and expressive synthesized voices.

- Improved Naturalness: OpenAI's new TTS models produce speech that is significantly more natural and human-like, enhancing the user experience.

- Voice Cloning: The ability to clone a user's voice opens up new possibilities for personalized voice assistants and accessibility applications.

- Expressive Speech: The new APIs offer greater control over the tone, emotion, and pace of synthesized speech, allowing for more engaging and expressive interactions.

- Multiple Voice Styles: Developers now have access to a wider range of voice styles and tones, catering to diverse user preferences and application requirements.
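As a rough sketch of what a TTS request looks like in practice, the helper below calls the speech endpoint of the existing OpenAI Python SDK and writes the audio to disk. The `tts-1` model and `alloy` voice are defaults taken from the current public SDK, not necessarily the models or voices announced at the conference, and `synthesize_to_file` is a hypothetical helper name.

```python
from pathlib import Path
from typing import Any


def synthesize_to_file(client: Any, text: str, out_path: str,
                       model: str = "tts-1", voice: str = "alloy") -> Path:
    """Request speech for `text` and write the returned audio bytes to disk.

    `client` is expected to be an `openai.OpenAI()` instance. The model and
    voice defaults are assumptions; substitute whatever your account offers.
    """
    if not text.strip():
        raise ValueError("text must not be empty")
    response = client.audio.speech.create(model=model, voice=voice, input=text)
    path = Path(out_path)
    path.write_bytes(response.read())
    return path
```

Passing the client in as a parameter keeps the helper easy to test with a stub and lets you swap voices per user or per application without touching the call site.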


Streamlined Development Tools and Resources

OpenAI has not only improved its APIs but also streamlined the development process, making it easier than ever to build voice assistants.

Simplified SDKs and Libraries

Integrating OpenAI's APIs into existing projects is now significantly easier thanks to simplified SDKs and libraries available for various programming languages.

- Supported Languages: Official SDKs cover popular languages such as Python, JavaScript/TypeScript, and Java; C++ projects generally use community-maintained libraries or call the REST API directly.

- Simplified Code: The APIs are designed with simplicity in mind, requiring minimal code to implement core functionalities.

- Comprehensive Documentation: Well-documented APIs and SDKs make integration straightforward, even for developers with limited experience.
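To give a sense of how little code a core call needs, here is a minimal transcription sketch against the existing OpenAI Python SDK. The `whisper-1` model name comes from the current public SDK and may differ from the models announced at the conference; `transcribe` is a hypothetical helper name.

```python
from typing import Any


def transcribe(client: Any, audio_path: str, model: str = "whisper-1") -> str:
    """Send one audio file for transcription and return the recognized text.

    `client` is expected to be an `openai.OpenAI()` instance; the default
    model name is an assumption and can be overridden by the caller.
    """
    with open(audio_path, "rb") as audio_file:
        result = client.audio.transcriptions.create(model=model, file=audio_file)
    return result.text.strip()
```

With the `openai` package installed and `OPENAI_API_KEY` set in the environment, usage would look like `transcribe(OpenAI(), "clip.wav")` after `from openai import OpenAI`.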


Comprehensive Documentation and Tutorials

OpenAI provides comprehensive documentation, tutorials, and community support to assist developers throughout the development lifecycle.

- Detailed Documentation: OpenAI's documentation covers all aspects of API usage, providing clear explanations and examples.

- Interactive Tutorials: Step-by-step tutorials guide developers through the process of building voice assistant applications.

- Active Community Support: A vibrant community forum provides a platform for developers to share knowledge, ask questions, and collaborate.


Ethical Considerations and Responsible AI in Voice Assistant Creation

OpenAI emphasizes responsible AI development and addresses critical ethical concerns in voice assistant creation.

Bias Mitigation Strategies

OpenAI is actively working to mitigate bias in its models and provides guidance to developers on addressing potential biases in their applications.

- Bias Detection Techniques: OpenAI employs advanced techniques to identify and mitigate biases in its models.

- Ethical Guidelines: Comprehensive ethical guidelines provide developers with best practices for building fair and unbiased voice assistants.
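On the developer side, one simple bias check is to compare how well the assistant performs for different user groups on a labeled test set. The sketch below is a generic illustration of that idea, not OpenAI's own technique; the function names and the accent labels in the usage note are made up for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_group(results: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Map each group label to the share of its samples handled correctly."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for group, is_correct in results:
        totals[group] += 1
        correct[group] += int(is_correct)
    return {group: correct[group] / totals[group] for group in totals}


def largest_gap(per_group: Dict[str, float]) -> float:
    """Difference between the best-served and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())
```

For instance, feeding in `(accent_label, intent_was_recognized)` pairs from an evaluation run and alerting when `largest_gap` exceeds a threshold you choose gives an early warning that one group is being served noticeably worse.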


Privacy and Security Best Practices

Protecting user privacy and data security is paramount. OpenAI highlights measures to ensure responsible data handling.

- Data Encryption: Robust encryption methods protect user data throughout the development and usage lifecycle.

- User Consent: OpenAI emphasizes the importance of obtaining explicit user consent for data collection and usage.

- Data Anonymization: Techniques for data anonymization help protect user privacy while enabling data analysis.
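As a small illustration of the anonymization point, the sketch below masks e-mail addresses and phone-like numbers in a transcript before it is logged or analyzed. This is simple pattern-based redaction under assumed regular expressions, not a complete anonymization scheme; names, addresses, and other identifiers would need additional handling.

```python
import re

# Illustrative patterns only; real deployments need broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"(?<!\w)\+?\d[\d\s().-]{7,}\d(?!\w)")


def redact(transcript: str) -> str:
    """Replace e-mail addresses and phone-like digit runs with placeholders."""
    transcript = EMAIL.sub("[EMAIL]", transcript)
    return PHONE.sub("[PHONE]", transcript)
```

Redacting before storage, rather than at analysis time, means the raw identifiers never reach your logs in the first place.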


Conclusion

OpenAI's 2024 Developer Conference has revolutionized voice assistant creation, giving developers the tools and resources to build more sophisticated, intuitive, and ethically responsible voice assistants. The advancements in speech-to-text, natural language understanding, and text-to-speech, combined with streamlined development tools, mark a significant step forward for the field. By combining these new APIs with the bias, privacy, and security practices outlined above, developers can build voice assistants that are both more capable and more trustworthy. Dive into OpenAI's resources and start building your next-generation voice assistant today!

Featured Posts

-

Individual Investors Vs Professionals Who Wins When Markets Fall

Apr 28, 2025

Individual Investors Vs Professionals Who Wins When Markets Fall

Apr 28, 2025 -

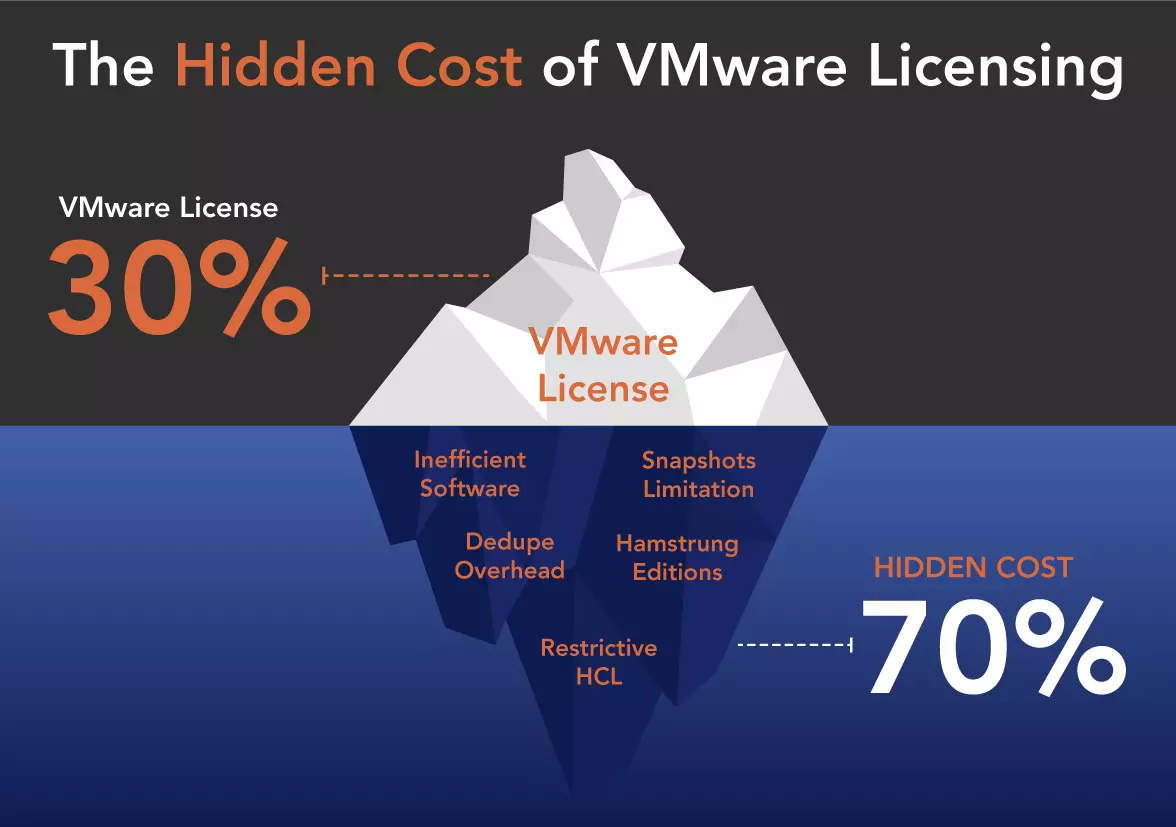

Sharp Increase In V Mware Costs At And T Challenges Broadcoms Pricing

Apr 28, 2025

Sharp Increase In V Mware Costs At And T Challenges Broadcoms Pricing

Apr 28, 2025 -

Chinas Tariff Policy Shift Implications For Specific Us Products

Apr 28, 2025

Chinas Tariff Policy Shift Implications For Specific Us Products

Apr 28, 2025 -

Understanding High Stock Market Valuations A Bof A Analysis

Apr 28, 2025

Understanding High Stock Market Valuations A Bof A Analysis

Apr 28, 2025 -

Us Stock Market Rally Driven By Tech Giants Tesla In The Lead

Apr 28, 2025

Us Stock Market Rally Driven By Tech Giants Tesla In The Lead

Apr 28, 2025

Latest Posts

-

Us Stock Market Rally Driven By Tech Giants Tesla In The Lead

Apr 28, 2025

Us Stock Market Rally Driven By Tech Giants Tesla In The Lead

Apr 28, 2025 -

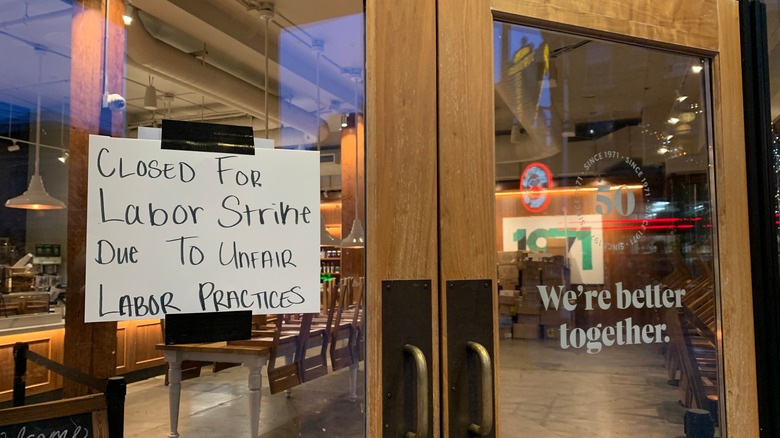

Unionized Starbucks Employees Turn Down Companys Guaranteed Raise

Apr 28, 2025

Unionized Starbucks Employees Turn Down Companys Guaranteed Raise

Apr 28, 2025 -

Starbucks Workers Reject Companys Pay Raise Offer

Apr 28, 2025

Starbucks Workers Reject Companys Pay Raise Offer

Apr 28, 2025 -

Starbucks Union Rejects Companys Proposed Wage Increase

Apr 28, 2025

Starbucks Union Rejects Companys Proposed Wage Increase

Apr 28, 2025 -

The Proposed Broadcom V Mware Acquisition An Extreme Price Surge

Apr 28, 2025

The Proposed Broadcom V Mware Acquisition An Extreme Price Surge

Apr 28, 2025